Is all AI created equal? Not quite.

With ChatGPT surpassing 100 million weekly users and 78% of organizations reporting AI use in at least one business function in 2024 (McKinsey), companies are rushing to rebrand themselves as "AI-powered" in every deck and demo. But as our opening question suggests, not all AI is created equal.

Traditional due diligence methods, which work well for predictable technologies such as SaaS, often fall short when evaluating AI companies. The fundamental difference? AI systems learn patterns from massive amounts of data, which makes them inherently more complex, more context-sensitive, and more brittle than conventional software.

As an angel investor, you need to cut through the noise before committing your capital. That's where AI-specific due diligence comes in: not just to assess the technology, but to understand the moat, market, and mindset behind it.

Let’s break down the most important questions to ask when evaluating an AI-based product. These aren’t just checkboxes; they're conversation starters for uncovering the real potential (or red flags).

1. Is the AI Being Used Within Its Known Limitations?

This is perhaps the most critical question that traditional due diligence completely misses. Every AI technology has fundamental limitations, and it's essential to understand these constraints to evaluate the tech’s viability.

Large language models (LLMs) are a case in point. They’re powerful, but let’s be clear: LLMs don’t understand language; they generate statistically likely sequences of words. That means hallucinations (made-up facts or logic) aren’t occasional bugs; they’re a fundamental risk.

A 2024 paper in the Springer journal Ethics and Information Technology put it bluntly: “ChatGPT is bullshit.” The authors argue that because LLMs are built to produce plausible-sounding text rather than to track the truth, they will always be vulnerable to confident-sounding misinformation.

So when a startup says its AI can deliver 100% accurate insights or auto-draft client-ready emails without oversight, you should pause. For example, one AI sales startup that raised hundreds of millions of dollars promised autonomous agents that could handle prospecting and outreach. Within three months, 90% of its users had dropped off because the agents frequently misused customer information, fabricated meeting notes, and mishandled calls.

This points to the black box problem. If the system can’t explain why it made a decision (or worse, the founders can’t), that’s a liability, not a moat.

Red flags: Vague talk about “proprietary algorithms,” dismissal of model limitations, or claims that the system is “too complex to explain.”

2. How Good Is Your Data, and Where Does It Come From?

Here's a hard truth: AI is only as good as the data it's trained on. Poor data quality can lead to inaccurate predictions, biased outcomes, and costly failures. You need to dig deep into the data sources, collection methods, and quality assurance processes.

Data is the foundation of any AI system. Poor data quality, limited access to fresh data for updates, and imbalances in how different cases are represented can all produce models that simply don't generalize beyond their original context or use case.

Key areas to investigate include data accuracy, completeness, representativeness, and whether the data reflects the diversity of real-world scenarios the AI will encounter.

Pro tip: Ask to see examples of how the system performs with edge cases or unusual data inputs. This will reveal how robust the AI is.
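To make completeness and representativeness concrete, here is a minimal Python sketch of the kind of checks a technical advisor might run on a sample of a startup's training data. It is an illustration under stated assumptions, not a standard audit: the record fields, the `region` segment, the expected real-world shares, and the 10-point tolerance are all hypothetical.

```python
from collections import Counter

def missing_rate(records, fields):
    """Fraction of records with no value (None or "") for each field."""
    if not records:
        raise ValueError("no records to audit")
    total = len(records)
    return {f: sum(1 for r in records if r.get(f) in (None, "")) / total
            for f in fields}

def representation_gaps(records, field, expected_shares, tolerance=0.10):
    """Return segments whose share of the data differs from the expected
    real-world share by more than `tolerance` (value = observed - expected).
    The default tolerance is an arbitrary, illustrative choice."""
    if not records:
        raise ValueError("no records to audit")
    counts = Counter(r.get(field) for r in records)
    total = len(records)
    gaps = {}
    for segment, expected in expected_shares.items():
        observed = counts.get(segment, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[segment] = round(observed - expected, 3)
    return gaps

# Hypothetical sample: skewed toward US users, no APAC users, one missing age.
records = [
    {"region": "US", "age": 34}, {"region": "US", "age": None},
    {"region": "US", "age": 51}, {"region": "US", "age": 29},
    {"region": "EU", "age": 42},
]
print(missing_rate(records, ["region", "age"]))
print(representation_gaps(records, "region",
                          {"US": 0.4, "EU": 0.4, "APAC": 0.2}))
```

On this toy sample, 20% of records are missing an age, and all three regions are flagged: US users are over-represented by 0.4, while EU and APAC users are each under-represented by 0.2. A model trained on such data would have little basis for handling APAC users at all.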

3. What's Your Strategy for Different Markets and Regulatory Compliance?

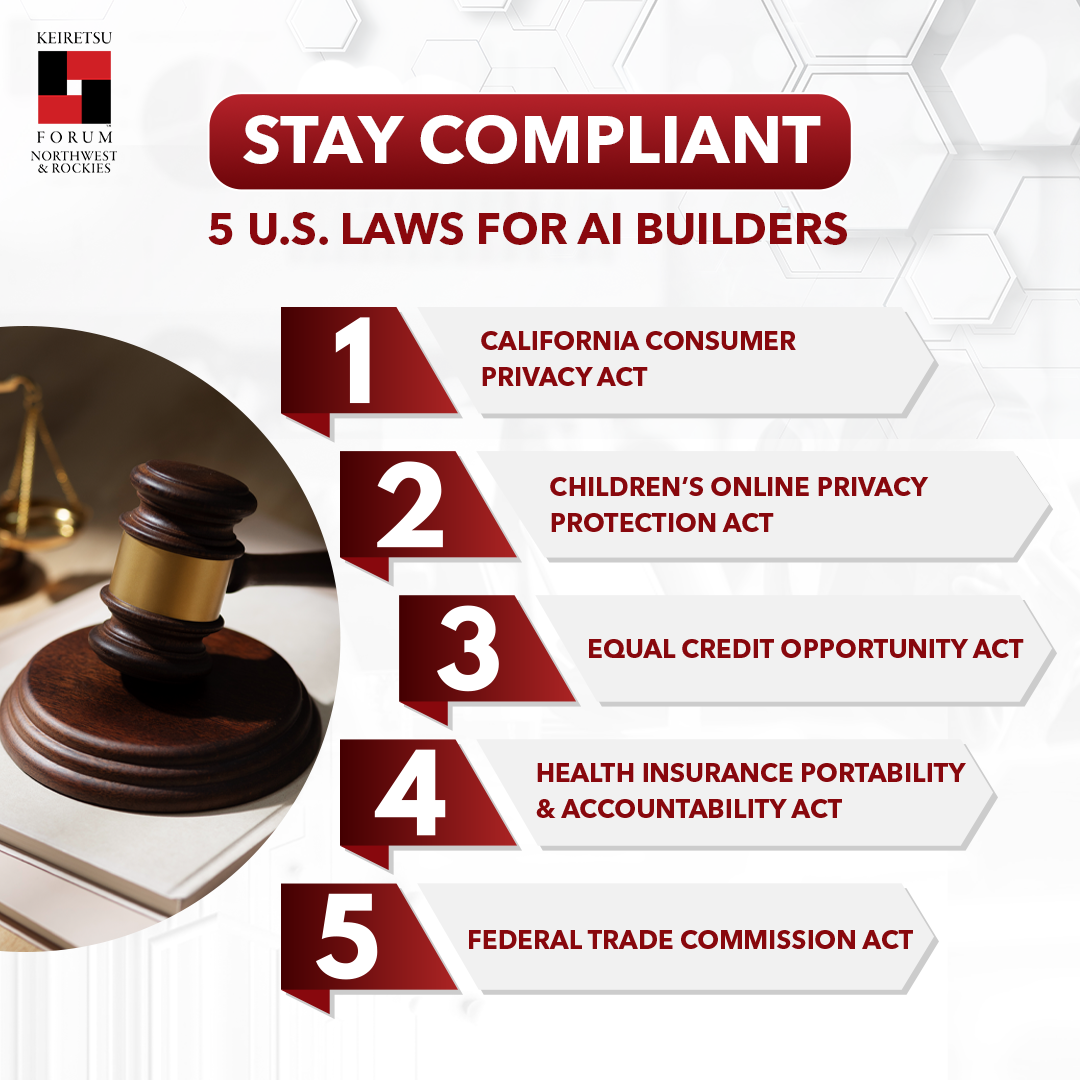

This is where many AI startups fail to think ahead. The regulatory landscape for AI is evolving rapidly and varies dramatically by market. Brazil's AI Act is about 20 times more stringent than the EU's AI Act. Between August and September 2024 alone, California enacted 17 different AI laws. Elsewhere, four African countries have passed their own AI Acts.

Consider a children's AI robot that works perfectly in the US market. If that robot gives advice on topics considered acceptable in the US, it could face serious legal consequences when deployed in Saudi Arabia, where the same topics may be restricted. The technology needs to be adapted not just to different languages and cultures, but to entirely different regulatory and social frameworks.

Even moving from the US to Europe requires extensive documentation, risk assessments, and compliance with the GDPR, the Digital Markets Act, and the Digital Services Act. In 2024, TikTok suspended the rewards feature of its TikTok Lite app in France and Spain after the European Commission opened proceedings because the company had launched it without submitting the risk assessment required under the Digital Services Act.

Look for evidence of responsible AI practices, including regular audits, ethics reviews, and clear escalation procedures for when things go wrong. The organization should assign clear roles and responsibilities for AI oversight, not just for technical development.

4. How Do You Handle AI System Failures and Model Updates?

Unlike traditional software, where you can simply update a line of code and push a fix, AI systems require a completely different repair process. When an AI system starts behaving incorrectly, you first need to recall the model, pulling it from production so people stop using it. Then you must use interpretability techniques to understand why it is making incorrect decisions, collect enough data to retrain it, and make sure it doesn't learn unwanted patterns in the process.

This repair process is complex and resource-intensive. Even Google, one of the world's AI leaders, struggles with it: in February 2024 it paused Gemini's ability to generate images of people after the feature produced historically inaccurate images, and it took months to restore. For an early-stage, cash-strapped startup, managing this process without proper planning can be nearly impossible.

Understanding the maintenance requirements helps you anticipate ongoing costs and check that the company won't be left with an outdated system that degrades over time. Ask about their model recall procedures, retraining processes, and how they prevent overfitting during updates.
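One safeguard worth asking about is a promotion gate: a retrained model replaces the live one only if it performs at least as well on data it never trained on, including a curated set of edge cases; otherwise the team keeps, or rolls back to, the current model. The minimal Python sketch below illustrates that idea under simplifying assumptions: models are plain functions, accuracy is the only metric, and the names `choose_model`, `max_drop`, and the toy models are hypothetical rather than any particular company's process.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical promotion gate; names and threshold are illustrative assumptions.
Example = Tuple[object, object]      # (input, expected output)
Model = Callable[[object], object]   # any callable mapping input -> output

def accuracy(model: Model, examples: Sequence[Example]) -> float:
    """Share of evaluation examples the model gets right."""
    if not examples:
        raise ValueError("evaluation set is empty")
    return sum(1 for x, y in examples if model(x) == y) / len(examples)

def choose_model(current: Model, candidate: Model,
                 held_out: Sequence[Example], edge_cases: Sequence[Example],
                 max_drop: float = 0.01) -> Model:
    """Promote the retrained candidate only if it loses no more than
    `max_drop` accuracy versus the current model on both the held-out set
    and the edge-case set; otherwise keep (roll back to) the current model."""
    for eval_set in (held_out, edge_cases):
        if accuracy(candidate, eval_set) < accuracy(current, eval_set) - max_drop:
            return current
    return candidate

# Toy example: the retrained model mishandles the boundary case x == 0.
def current_model(x):
    return x >= 0

def retrained_model(x):
    return x > 0

held_out = [(1, True), (-1, False), (5, True)]
edge_cases = [(0, True)]
chosen = choose_model(current_model, retrained_model, held_out, edge_cases)
print(chosen is current_model)
```

Here both models score perfectly on the general held-out set, so only the edge-case set exposes the regression and the gate keeps the current model. Scoring on held-out data is also what surfaces overfitting: a model that has memorized its new training data can look better in training but worse on examples it has never seen.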

5. What Happens If the AI Makes a Wrong Decision?

This is the million-dollar question. Every AI system will make mistakes; the key is having robust processes for detecting, correcting, and learning from errors. Ask about error detection mechanisms, rollback procedures, and liability frameworks.

For investor due diligence, this also means understanding the startup's insurance coverage and legal protections for AI-related incidents.

AI Due Diligence Is About Risk Management, Not Just Innovation

As AI continues to transform industries, conducting thorough due diligence isn't just good practice; it's essential for survival. The methods currently used for due diligence on AI companies are insufficient to address the technological, deployment, and market fit challenges that AI presents.

Remember, AI due diligence is an ongoing process, not a one-time check. As one expert noted, "AI should always serve as a tool to support expert analysis, not replace it." The same principle applies to due diligence: use these questions as tools to support your decision-making, but don't forget the importance of human judgment and expertise.

The AI revolution is here; make sure you're prepared to navigate it successfully.

References:

https://redblink.com/ai-due-diligence/

https://aijourn.com/ai-and-tech-due-diligence/

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai